Previously I discussed the prime number theorem and proved Chebyshev’s weak form of it, namely that $\pi(n)=\Theta(n/\ln n)$. Chebyshev was also able to show the conditional result that if

\[ \lim_{n\to\infty}\frac{\pi(n)}{n/\ln n} \]

exists, then its value is $1$. While this might seem to be quite close to the prime number theorem, there is no especially good reason why the limit should exist. In fact, in a sense this result makes the limit less likely to exist; after our previous proof all one knows is that the limit lies between $0.34$ and $4.16$ (supposing it exists), but now it can have only one possible value!

Some new functions

Before proving this result, it is useful to introduce the von Mangoldt function defined by

\[ \Lambda(n) := \begin{cases}
\ln p & \text{if $n\geq2$ is a perfect power of the prime $p$} \\
0 & \text{otherwise}
\end{cases} \]

and the Chebyshev function defined as the partial sums of the von Mangoldt function,

\[ \psi(n) := \sum_{m\leq n}\Lambda(m) . \]

For each prime $p$ there are $\lfloor\log_p n\rfloor$ perfect powers of $p$ less than or equal to $n$ and strictly greater than $1$, so an alternate way of writing this function is

\[ \psi(n) = \sum_{p\leq n}\lfloor\log_p n\rfloor\ln p . \]
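To make the two descriptions of $\psi(n)$ concrete, here is a minimal Python sketch that computes it both ways and compares the results. The function names (`von_mangoldt`, `psi_by_definition`, `psi_by_primes`) are hypothetical helpers chosen for this illustration, and the naive trial division is only meant for small $n$.

```python
import math

def von_mangoldt(n: int) -> float:
    """Lambda(n): ln p if n = p^k for a prime p and some k >= 1, else 0."""
    if n < 2:
        return 0.0
    # Find the smallest prime factor p of n by trial division.
    p = 2
    while p * p <= n and n % p != 0:
        p += 1
    if p * p > n:
        p = n  # no factor up to sqrt(n), so n itself is prime
    # n is a power of p exactly when dividing out every factor p leaves 1.
    while n % p == 0:
        n //= p
    return math.log(p) if n == 1 else 0.0

def psi_by_definition(n: int) -> float:
    """psi(n) as the partial sum of Lambda(m) over 1 <= m <= n."""
    return sum(von_mangoldt(m) for m in range(1, n + 1))

def psi_by_primes(n: int) -> float:
    """psi(n) as the sum over primes p <= n of floor(log_p n) * ln p."""
    total = 0.0
    for p in range(2, n + 1):
        if all(p % d for d in range(2, math.isqrt(p) + 1)):  # p is prime
            # Count the powers p, p^2, ... that are <= n. This count is
            # floor(log_p n), done in integers to avoid rounding issues.
            k, q = 0, p
            while q <= n:
                k += 1
                q *= p
            total += k * math.log(p)
    return total

if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, psi_by_definition(n), psi_by_primes(n))
```

The two columns should agree up to floating-point rounding, since both sums contain exactly one $\ln p$ for each prime power $p^k\leq n$.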

Upper bound on $\psi(n)$

By removing the floor and using the logarithm change-of-base formula one gets an upper bound on $\psi(n)$:

\[ \psi(n) \leq \sum_{p\leq n}(\log_p n)\ln p = \sum_{p\leq n}\ln n = \pi(n)\ln n \]

In particular, using the previously established $\pi(n)=O(n/\ln n)$ this implies

\[ \psi(n) = O(n) . \tag{1} \]

Lower bound on $\psi(n)$

By removing every term in the sum defining $\psi(n)$ except those where $m$ is a prime larger than $n'$, and using $\pi(n')=O(n'/\ln n')$, one gets a similar lower bound on $\psi(n)$:

\begin{align*}
\psi(n) &\geq \sum_{n'<p\leq n}\ln p \\
&\geq \sum_{n'<p\leq n}\ln n' \\
&= \ln n'\bigl(\pi(n)-\pi(n')\bigr) \\
&= \pi(n)\ln n' + O(n')
\end{align*}

Now, this holds for all $n'<n$, so take $n':=n/\ln n$ (it is actually not necessary to ensure that $n'$ is an integer). Then the lower bound becomes

\[ \psi(n) \geq \pi(n)(\ln n-\ln\ln n) + O\Bigl(\frac{n}{\ln n}\Bigr) = \pi(n)\ln(n) + O\Bigl(\frac{n\ln\ln n}{\ln n}\Bigr) . \]

Combining the bounds on $\psi(n)$

Since the error term here is $o(n)$, combining with the upper bound $\psi(n)\leq\pi(n)\ln n$ we get the nice relation

\[ \psi(n) = \pi(n)\ln n + o(n) . \]

Dividing by $n$, we find that

\[ \frac{\psi(n)}{n} = \frac{\pi(n)}{n/\ln n} + o(1) . \tag{2} \]

This is a very strong asymptotic relation between $\psi(n)/n$ and $\pi(n)/(n/\ln n)$; it says that the difference between these functions goes to zero as $n$ increases. So as $n$ gets large, the functions essentially become identical.
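Relation (2) can be illustrated numerically. The sketch below, with a hypothetical helper `chebyshev_data`, sieves the primes up to $n$ and prints $\psi(n)/n$ next to $\pi(n)/(n/\ln n)$. The derivation above only bounds the gap by $O(\ln\ln n/\ln n)$, so one should expect it to shrink slowly.

```python
import math

def chebyshev_data(n: int) -> tuple:
    """Return (psi(n), pi(n)) for n >= 2 using a sieve of Eratosthenes."""
    is_prime = bytearray([1]) * (n + 1)
    is_prime[0] = is_prime[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            # Cross out i*i, i*i + i, ... ; the right side has matching length.
            is_prime[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    psi, prime_count = 0.0, 0
    for p in range(2, n + 1):
        if is_prime[p]:
            prime_count += 1
            # Each power p^k <= n contributes ln p to psi(n).
            q = p
            while q <= n:
                psi += math.log(p)
                q *= p
    return psi, prime_count

if __name__ == "__main__":
    for n in (10**2, 10**3, 10**4, 10**5):
        psi, prime_count = chebyshev_data(n)
        left = psi / n
        right = prime_count / (n / math.log(n))
        print(f"n={n:>7}  psi(n)/n={left:.4f}  "
              f"pi(n)/(n/ln n)={right:.4f}  gap={right - left:.4f}")
```

The gap is never negative, which reflects the upper bound $\psi(n)\leq\pi(n)\ln n$.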

The first estimation of $\ln(n!)$

The proof now proceeds by estimating the quantity $\ln(n!)$ in two different ways. The first way is a weak form of Stirling’s approximation:

\[ \ln(n!) = n \ln n + O(n) \tag{3} \]

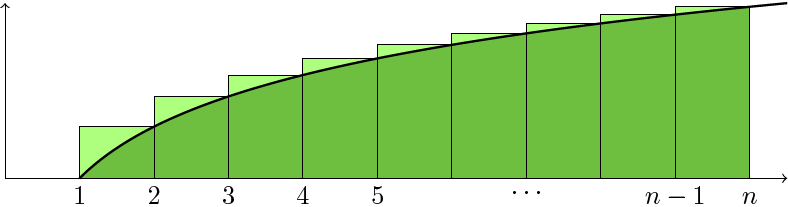

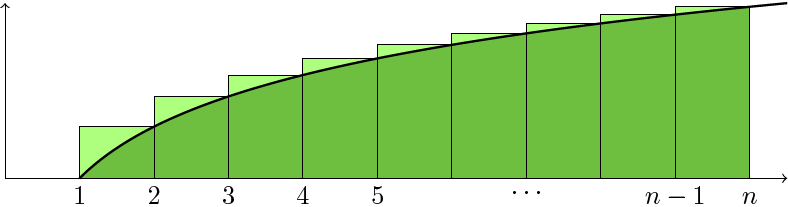

A simple proof of this proceeds by estimating $\newcommand{\d}{\,\mathrm{d}}\int_1^n\ln t\d t$ by rectangles of width $1$:

Note that $\ln(n!)=\sum_{m=1}^n \ln m$, so the area formed by the rectangles is exactly the quantity to estimate in (3).

As shown in the diagram, this quantity is closely under-estimated by $\int_1^n\ln t\d t$ (the dark green area); the amount of under-estimation is given by the light green area. Although this is difficult to evaluate exactly, by sliding the light green triangle-like shapes to the right they all fit inside the final rectangle, which has area $\ln n$. Thus the dark green area under-estimates the area of the rectangles by at most $\ln n$, and we have

\begin{align*}
\ln(n!) &= \int_1^n\ln t\d t + O(\ln n) \\
&= \bigl[t\ln t - t\bigr]_1^n + O(\ln n) \\
&= n\ln n \mathbin- n + O(\ln n)
\end{align*}

which is a stronger form of (3).
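The rectangle argument says that $\ln(n!)$ exceeds $\int_1^n\ln t\,\mathrm{d}t = n\ln n - n + 1$ by at most $\ln n$. A quick numerical check, with hypothetical helper names, might look like this:

```python
import math

def log_factorial(n: int) -> float:
    """ln(n!) computed as the sum of ln m for 1 <= m <= n."""
    return math.fsum(math.log(m) for m in range(1, n + 1))

def integral_of_log(n: int) -> float:
    """Exact value of the integral of ln t from 1 to n: n ln n - n + 1."""
    return n * math.log(n) - n + 1

if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        gap = log_factorial(n) - integral_of_log(n)
        # The sliding-triangles argument predicts 0 <= gap <= ln n.
        print(f"n={n:>6}  gap={gap:.4f}  ln n={math.log(n):.4f}")
```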

The second estimation of $\ln(n!)$

The second method of estimation relies on the prime factorization of $n!$. Recall we had already determined the exponent of $p$ in the prime factorization (also known as the $p$-adic order) of $n!$ to be $\nu_p(n!) := \sum_{k=1}^\infty\lfloor n/p^k\rfloor$. Using this, we have:

\begin{align*}
\ln(n!) &= \ln\Bigl(\prod_{p\leq n}p^{\nu_p(n!)}\Bigr) \\
&= \sum_{p\leq n}\nu_p(n!)\ln p \\
&= \sum_{p^k\leq n}\biggl\lfloor\frac{n}{p^k}\biggr\rfloor\ln p \\
&= \sum_{m\leq n}\Bigl\lfloor\frac{n}{m}\Bigr\rfloor\Lambda(m) \\
&= \sum_{m\leq n}\Bigl(\frac{n}{m} + O(1)\Bigr)\Lambda(m) \\
&= n\sum_{m\leq n}\frac{\Lambda(m)}{m} + O\bigl(\psi(n)\bigr)
\end{align*}
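The identity $\ln(n!)=\sum_{m\leq n}\lfloor n/m\rfloor\Lambda(m)$ and the size of the error made by dropping the floor can both be checked numerically. The following is a sketch under the assumption that a simple sieve is good enough. `von_mangoldt_table` and the other names are hypothetical helpers, not anything from a library.

```python
import math

def von_mangoldt_table(n: int) -> list:
    """Return lam with lam[m] = Lambda(m) for 0 <= m <= n."""
    lam = [0.0] * (n + 1)
    is_composite = bytearray(n + 1)
    for p in range(2, n + 1):
        if not is_composite[p]:  # p is prime
            for multiple in range(p * p, n + 1, p):
                is_composite[multiple] = 1
            # Lambda equals ln p on the powers p, p^2, p^3, ...
            q = p
            while q <= n:
                lam[q] = math.log(p)
                q *= p
    return lam

def log_factorial_via_primes(n: int) -> float:
    """Sum of floor(n/m) * Lambda(m) over m <= n, which should equal ln(n!)."""
    lam = von_mangoldt_table(n)
    return math.fsum((n // m) * lam[m] for m in range(1, n + 1))

def floor_error(n: int) -> tuple:
    """Return (n * sum Lambda(m)/m - ln(n!), psi(n)) to compare the two."""
    lam = von_mangoldt_table(n)
    without_floor = n * math.fsum(lam[m] / m for m in range(1, n + 1))
    return without_floor - log_factorial_via_primes(n), math.fsum(lam)

if __name__ == "__main__":
    for n in (10, 100, 1000):
        error, psi = floor_error(n)
        print(n, log_factorial_via_primes(n), math.lgamma(n + 1), error, psi)
```

Here `math.lgamma(n + 1)` serves as an independent value of $\ln(n!)$. The error from removing the floor lies between $0$ and $\psi(n)$ because each fractional part of $n/m$ is in $[0,1)$.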

We can evaluate $\sum_{m\leq n}\Lambda(m)/m$ using a trick known as Abel’s summation formula:

\begin{align*}
\sum_{m\leq n}\frac{\Lambda(m)}{m} &= \sum_{m\leq n}\frac{\psi(m)-\psi(m-1)}{m} \\
&= \sum_{m\leq n}\frac{\psi(m)}{m} \mathbin- \sum_{m\leq n-1}\frac{\psi(m)}{m+1} \\
&= \sum_{m\leq n-1}\psi(m)\Bigl(\frac{1}{m}-\frac{1}{m+1}\Bigr) + \frac{\psi(n)}{n} \\
&= \sum_{m\leq n-1}\psi(m)\Bigl[-\frac{1}{t}\Bigr]_m^{m+1} + \frac{\psi(n)}{n} \\
%&= \sum_{m\leq n-1}\psi(m)\int_m^{m+1}\frac{\!\d t}{t^2} + \frac{\psi(n)}{n} \\
&= \sum_{m\leq n-1}\int_m^{m+1}\frac{\psi(t)}{t^2}\!\d t + \frac{\psi(n)}{n} \\
&= \int_1^n\frac{\psi(t)}{t^2}\!\d t + \frac{\psi(n)}{n}
\end{align*}

Most of this is just algebra; the fact that $\psi(m)=\psi(t)$ for $t\in[m,m+1)$ justifies pulling $\psi(m)$ into the integral. Putting these together and using (1), we get our second estimate on $\ln(n!)$:

\[ \ln(n!) = n\int_1^n\frac{\psi(t)}{t^2}\!\d t + O(n) \tag{4} \]
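The Abel summation step can also be sanity-checked by computer. Because $\psi$ is constant on each interval $[m,m+1)$, the integral $\int_1^n\psi(t)/t^2\,\mathrm{d}t$ is exactly $\sum_{m\leq n-1}\psi(m)\bigl(1/m-1/(m+1)\bigr)$, so no numerical quadrature is needed. The sketch below uses hypothetical helper names and compares both sides of the identity.

```python
import math

def von_mangoldt_table(n: int) -> list:
    """Return lam with lam[m] = Lambda(m) for 0 <= m <= n."""
    lam = [0.0] * (n + 1)
    is_composite = bytearray(n + 1)
    for p in range(2, n + 1):
        if not is_composite[p]:  # p is prime
            for multiple in range(p * p, n + 1, p):
                is_composite[multiple] = 1
            q = p
            while q <= n:
                lam[q] = math.log(p)
                q *= p
    return lam

def abel_sides(n: int) -> tuple:
    """Return (sum of Lambda(m)/m, integral of psi(t)/t^2 + psi(n)/n)."""
    lam = von_mangoldt_table(n)
    lhs = sum(lam[m] / m for m in range(1, n + 1))
    psi = 0.0
    integral = 0.0
    for m in range(1, n):
        psi += lam[m]  # psi now holds psi(m)
        # Exact integral of psi(t)/t^2 over [m, m+1], where psi(t) = psi(m).
        integral += psi * (1.0 / m - 1.0 / (m + 1))
    psi += lam[n]  # psi now holds psi(n)
    return lhs, integral + psi / n

if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, *abel_sides(n))
```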

Putting the estimates together

Combining (3) and (4) and dividing by $n$ we find that

\[ \int_1^n\frac{\psi(t)}{t^2}\!\d t = \ln n + O(1) . \tag{5} \]
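As an illustration of (5), one can tabulate $\int_1^n\psi(t)/t^2\,\mathrm{d}t-\ln n$ and watch it stay bounded. This is a minimal sketch with a hypothetical function name. It evaluates the integral exactly as a sum over unit intervals, using the fact that $\psi$ is a step function, and of course a finite table is evidence rather than proof.

```python
import math

def integral_minus_log(n: int) -> float:
    """Return the integral of psi(t)/t^2 over [1, n], minus ln n."""
    # Build Lambda(m) for m <= n with a simple sieve.
    lam = [0.0] * (n + 1)
    is_composite = bytearray(n + 1)
    for p in range(2, n + 1):
        if not is_composite[p]:  # p is prime
            for multiple in range(p * p, n + 1, p):
                is_composite[multiple] = 1
            q = p
            while q <= n:
                lam[q] = math.log(p)
                q *= p
    # psi(t) equals psi(m) on [m, m+1), so each unit interval contributes
    # psi(m) * (1/m - 1/(m+1)) to the integral.
    psi = 0.0
    integral = 0.0
    for m in range(1, n):
        psi += lam[m]
        integral += psi * (1.0 / m - 1.0 / (m + 1))
    return integral - math.log(n)

if __name__ == "__main__":
    for n in (10, 100, 1000, 10000, 100000):
        print(f"n={n:>7}  integral - ln n = {integral_minus_log(n):.4f}")
```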

Using (5) to derive the result

Suppose that the limit in question exists and equals $1+\epsilon$ for some $\epsilon>0$. Then, taking the limit as $n\to\infty$ in (2), we have

\[ \lim_{n\to\infty}\frac{\psi(n)}{n} = \lim_{n\to\infty}\frac{\pi(n)}{n/\ln n} = 1 + \epsilon . \]

It follows that there is some $N$ such that $\psi(t)/t\geq 1+\epsilon/2$ for all $t\geq N$. Now, $N$ only depends on the constant $\epsilon$, so taking $N$ fixed as $n\to\infty$ we have

\[ \int_1^n\frac{\psi(t)}{t^2}\d t \geq \int_N^n\frac{\psi(t)}{t^2}\d t \geq \int_N^n\frac{1+\epsilon/2}{t}\d t = \Bigl(1+\frac{\epsilon}{2}\Bigr)\ln n + O(1) . \]

This contradicts (5), since the coefficient on the leading term $\ln n$ is larger than $1$; for example, this implies that $\int_1^n\psi(t)/t^2\d t-\ln n=\Omega(\ln n)$, which is not $O(1)$ as given by (5).

Similarly, if the limit in question exists and equals $1-\epsilon$ for some $\epsilon>0$ then we have $\lim_{n\to\infty}\psi(n)/n = 1-\epsilon$, so there exists some $N$ such that $\psi(t)/t\leq1-\epsilon/2$ for all $t\geq N$. Then as $n\to\infty$ we have

\[ \int_1^n\frac{\psi(t)}{t^2}\d t \leq O(1) + \int_N^n\frac{1-\epsilon/2}{t}\d t = \Bigl(1-\frac{\epsilon}{2}\Bigr)\ln n + O(1) , \]

which contradicts (5), since this says that the coefficient on the leading term $\ln n$ is less than $1$.

Therefore, we conclude that if $\lim_{n\to\infty}\pi(n)/(n/\ln n)$ exists it cannot be strictly larger than or strictly less than $1$, so it must be exactly $1$.