In a previous post, I described a way of encoding a lexicographic ordering constraint into SAT—in other words, a method of deriving a formula in Boolean logic expressed in conjunctive normal form which is true exactly when the lexicographic constraint $[x_1,\dotsc,x_n]\leq[y_1,\dotsc,y_n]$ is true given that the $x_i$ and $y_i$ variables are either 1 (true) or 0 (false).

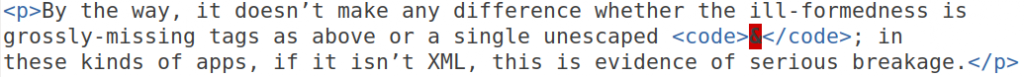

In this post, I will describe the “totalizer” SAT encoding of the constraint

\[ l \leq x_1+\dotsb+x_n \leq u \tag{$*$} \]

where the $x_i$ are Boolean variables (associated with 0 or 1) and $l$ and $u$ are fixed integers with $0\leq l\leq u\leq n$. This encoding was first proposed by Bailleux and Boufkhad in the 2003 paper Efficient CNF Encoding of Boolean Cardinality Constraints and has since become widely used. For example, it is outlined in the second paragraph on page 192 of Knuth’s The Art of Computer Programming volume 4B.

The idea behind the totalizer encoding for $(*)$ is to use a set of $n$ “totalizer” variables $r_1$, $\dotsc$, $r_n$ which essentially encode the value of the sum $x_1+\dotsb+x_n$ in unary. Ultimately, if exactly $m$ of the variables $x_1$, $\dotsc$, $x_n$ are true, then each of $r_1$, $\dotsc$, $r_m$ will be assigned true and each of $r_{m+1}$, $\dotsc$, $r_n$ will be assigned false. New auxiliary “linking” variables will be used in the process of ensuring that the $r_i$s will be assigned to their proper values.

The linking variables will be structured in the format of a binary tree in which the variables $r_1$, $\dotsc$, $r_n$ are in the root of the tree and the input variables $x_1$, $\dotsc$, $x_n$ are in the leaves of the tree. The tree will have $n$ leaves, with each leaf corresponding to a single one of the input variables on which the cardinality constraint $(*)$ is defined. Internal tree nodes will contain variables encoding (in unary) the sum of the input variables appearing in the subtree rooted at that internal node. Finally, in order to balance the tree, for every internal tree node the number of input variables in the left and right subtrees will be taken to be as close as possible. Concretely, when there are $k$ leaves descended from an internal node the left child will contain $\lfloor k/2\rfloor$ leaves and the right child will contain $k-\lfloor k/2\rfloor$ leaves.

The following diagram shows the structure of how the variables are linked for the case when there are eight input variables $x_1$, $\dotsc$, $x_8$.

In this diagram the green boxes denote true variables and the red boxes denote false variables. The figure shows how the values of the linking variables will be assigned when the input variables $x_3$, $x_5$, and $x_8$ are false and the other input variables are true. The structure here strongly resembles the structure of what happens during mergesort, and indeed the process can be viewed as sorting the Boolean array $[x_1,\dotsc,x_n]$ in descending order with the sorted array being ultimately stored in $[r_1,\dotsc,r_n]$.

So far the idea is pretty intuitive, but how can we encode the “merging” process in SAT? We don’t have the luxury of implementing the merge step using a loop or something like that—we need to solve the problem declaratively via clauses logically stating how the values of the variables in a given node depend on the values of the variables in that node’s children.

Let’s say $a_i$s denote the variables in the left child of the root and $b_i$s denote the variables in the right child of the root. The following diagram demonstrates the general principle of how the $a_i$s, $b_i$s, and $r_i$s may be related via one specific example. If at least three $a_i$s are true and at least two $b_i$s are true, that means that at least five $r_i$s must also be true. Moreover, if the variables which are immediately to the right of $a_3$ and $b_2$ are both false then the variable immediately to the right of $r_5$ must also be false (since if no more than three $a_i$s are true and no more than two $b_i$s are true, then no more than five $r_i$s can be true). Thus, the fact of $3+2=5$ yields the two clauses $(a_3\land b_2)\rightarrow r_5$ and $(\lnot a_4\land\lnot b_3)\rightarrow\lnot r_6$.

Of course, the same general principle can be used to relate all the variables throughout the entire tree. Say there are $k$ variables in both child nodes for one particular internal node. Then for each solution of $\alpha+\beta=\sigma$ with $0\leq\alpha\leq k$ and $0\leq\beta\leq k$, one needs the clauses $(a_\alpha\land b_\beta)\rightarrow r_\sigma$ and $(\lnot a_{\alpha+1}\land\lnot b_{\beta+1})\rightarrow\lnot r_{\sigma+1}$. Note that in these clauses one naturally defines $a_0$, $b_0$, and $r_0$ to be true and $a_{k+1}$, $b_{k+1}$, and $r_{2k+1}$ to be false. This causes some clauses to simplify, e.g., one would have $a_1\rightarrow r_1$ and $\lnot a_k\rightarrow\lnot r_{2k}$.

Now that the values $r_1$, $\dotsc$, $r_n$ are constrained to specify the value of $x_1+\dotsb+x_n$ in unary, we encode $(*)$ by setting the lowest $l$ values of the output variables to true and similarly by setting the highest $u$ values of the output variables to false. An example of this is shown in the following diagram.

Size of the encoding

A natural question now arises: how large is the encoding that is produced? The original paper of Bailleux and Boufkhad points out that the encoding uses $O(n\log n)$ variables and $O(n^2)$ clauses. They also mention that when $u$ is small the encoding size can be decreased via unit propagation. Indeed, after applying Boolean constraint propagation (BCP) to the encoding just described all linking variables in the internal nodes that have indices strictly larger than $u$ will be assigned to false. This obviously results in a smaller set of clauses (since once the values of some variables have been fixed, some clauses become automatically satisfied and can be removed). Surprisingly, I was not able to find any source giving a better bound than $O(n^2)$ on the number of clauses after this simplification process. In the rest of this post I’ll show that the number of clauses is in fact $O(nu)$.

First, let’s review the argument that the number of clauses is $O(n^2)$. For simplicity, suppose that $n$ is a power of two so that the tree of linking variables will be a perfect binary tree of height $\log_2 n = \lg n$. Level $i$ will contain $2^i$ nodes with each node containing $2^{\lg n-i}=n/2^i$ variables. On level $i$, two clauses will be generated for each possible selection of $\alpha$ and $\beta$ in the range $\{0,\dotsc,n/2^{i+1}\}$. Since there are $(n/2^{i+1}+1)^2\leq (n/2^i)^2$ selections, the total number of clauses generated in the encoding will be at most

\[ 2\sum_{i=0}^{\lg n-1}2^i\frac{n^2}{4^i} = 2n^2\frac{1-(1/2)^{\lg n}}{1-1/2} = 4n^2(1-1/n) = O(n^2) . \]

As mentioned, when $u$ (or $n-l$) is small in comparison to $n$, the number of clauses after simplification with BCP will be smaller than this. We’ll now improve on this analysis to show that the number of clauses will be $O(nu)$, and a similar argument will show that the number of clauses is also $O(n(n-l))$.

For simplicity, we’ll assume both $n$ and $u$ are powers of two. Following BCP, there will be $u$ variables remaining in each of the nodes in the first $\lg n-\lg u+1$ levels of the binary tree. Therefore each node in the first $\lg n-\lg u$ levels of the tree will generate at most $2(u+1)^2\leq8u^2$ clauses. Since level $i$ contains $2^i$ nodes, the first $\lg n-\lg u$ levels will contribute a total of at most

\[ 8u^2\sum_{i=0}^{\lg n-\lg u-1} 2^i = 8u^2(2^{\lg n-\lg u}-1) = 8u^2(n/u-1) = O(nu) \]

clauses. When $i\geq\lg n-\lg u$, the nodes on level $i$ contain $n/2^i=u/2^{i-(\lg n-\lg u)}$ variables and there will be $2^i$ of them. Similar to the above argument, each node will result in $(n/2^{i+1}+1)^2\leq u^2/4^{i-(\lg n-\lg u)}$ clauses. We’ll define $j=i-(\lg n-\lg u)$, so that taking $0\leq j<\lg u$ will cause $i$ to range over the final $\lg u$ level indices. Then the final $\lg u$ levels contribute a total of at most

\[ 2\sum_{j=0}^{\lg u-1}2^{j+\lg n-\lg u}\frac{u^2}{4^j} = 2nu\frac{1-(1/2)^{\lg u}}{1-1/2} = 4nu(1-1/u) = O(nu) \]

clauses. Thus, following BCP the totalizer encoding uses $O(nu)$ clauses.

Removing more clauses

The description above is an intuitive form of the encoding, but not all clauses in the encoding just described are strictly necessary. For example, recall that to enforce that $3\leq x_1+\dotsb+x_8\leq 6$ the three middle variables $r_4$, $r_5$, and $r_6$ were not initialized to either true or false. We don’t care which way those variables are assigned in the end, and the clauses which involve those variables can simply be dropped. In general, it is sufficient to only use the lowest $l$ variables and the highest $n-u$ variables from every node, i.e., for a node containing $k$ variables $\{a_1,\dotsc,a_k\}$ with $k\geq\max(l,n-u)$, we only need to use the variables $\{a_1, \dotsc, a_l\}$ and $\{a_{k-(n-u)+1}, \dotsc, a_k\}$ (note that these two sets may overlap). In this case, a similar argument to the one above gives us that the encoding uses $O(n(l+n-u))$ clauses which is better when both $l$ and $n-u$ are small.

Moreover, we can drop some biconditional constraints and make them unidirectional instead. For example, let’s say we want to ensure the lower bound in $(*)$. Suppose that $\{a_1,\dotsc,a_k\}$ are the unary counter variables associated with some subtree $A$. In this case it is not necessary to require that the variable $a_l$ is true if and only if $l$ input variables in in $A$ are true—it is sufficient to require that $a_l$ being true implies that $l$ input variables in $A$ are true. So, if $a_l$ is false it may still be the case that $l$ input variables in $A$ are true (this is an acceptable situation assuming our only purpose is to guarantee that the lower bound in $(*)$ is satisfied). In a similar way, to ensure the upper bound in $(*)$, it is only necessary to know that $a_{k-(n-u)+1}$ being false implies that $k-(n-u)+1$ input variables in $A$ are false (but $a_{k-(n-u)+1}$ may be true while $k-(n-u)+1$ input variables in $A$ are false).

This cuts the number of remaining clauses approximately in half. Say there are $k/2$ variables in both child nodes for one particular internal node. Then for each solution of $\alpha+\beta=\sigma$ with $0\leq\alpha\leq k/2$ and $0\leq\beta\leq k/2$, one only needs the clause $(a_\alpha\land b_\beta)\rightarrow r_\sigma$ when $\sigma\geq k-(n-u)+1$, and one only needs the clause $(\lnot a_{\alpha+1}\land\lnot b_{\beta+1})\rightarrow\lnot r_{\sigma+1}$ when $\sigma+1\leq l$.

In practice, it is unclear if only using unidirectional clauses is preferable to using bidirectional clauses or not; it seems to depend on the problem. Ed Wynn calls the biconditional encoding the “strengthened” encoding and has compiled a nice experimental analysis including both of these encodings and more. Results for the totalizer encoding are given in Figure 9, and he comments:

The effect of strengthening each encoding is small […] the lack of effect is much more surprising, because strengthening requires a substantial increase in the number of clauses for each encoding.

All other things equal, a smaller number of clauses is typically preferred so that the solver can keep fewer clauses in memory during the solving process. So, the fact that the bidirectional encoding has more clauses but isn’t always slower seems to indicate that the solver can find the extra clauses in the strengthened encoding useful, at least sometimes.

In fact, even more clauses can be removed—really, in order to enforce $(*)$ we only need to require that $r_l$ is true and $r_{u+1}$ is false, and all clauses involving $r_1$, $\dotsc$, $r_{l-1}$ and $r_{u+2}$, $\dotsc$, $r_n$ can simply be ignored. At first this might seem harmful—for example, we lose the desirable property that internal node variables with indices strictly larger than $u$ get assigned false by BCP. However, supposing that only unidirectional clauses were used, if there are any variables that would have been removed by BCP but are no longer (e.g., the left child of the root’s variable $a_k$ with $n/2\geq k\geq u+2$) then those variables will now only appear in the clauses in a single polarity. Modern SAT solvers will perform pure variable elimination to automatically remove clauses containing these variables which essentially means it automatically fixes the values of those variables, similar to BCP. So in this case we still have the $O(nu)$ clause bound, but also any clause containing any of $r_{u+2}$, $\dotsc$, $r_n$ in a positive polarity (e.g., $\lnot a_{n/2}\lor\lnot b_{n/2}\lor r_n$) doesn’t even appear in the encoding anymore.

A comment on Knuth’s description

I also just noticed something that I missed the first time I read Knuth’s description of the totalizer encoding in TAOCP: there is a small difference between the original Bailleux–Boufkhad encoding and the encoding that Knuth attributes to them in TAOCP 4B.

Bailleux and Boufkhad follow what I described above and use a “balanced” tree in their original paper: for every node they balance the number of leaves contained in the left and right subtrees as much as possible. Instead, Knuth describes an approach which uses a complete binary tree (i.e., a tree where every level except possibly the last is filled and all nodes in the last level are as far left as possible). Although the difference is not a major one, a complete tree can have nodes whose left and right subtrees have an arbitrarily large difference in the number of leaves that they contain.

For example, say $n=2^k+2^{k-1}$. Then the root of a complete tree with $n$ leaves has $2^{k-1}$ leaves in its right subtree but $2^{k}$ leaves in its left subtree. Intuitively, I would expect the original balanced encoding to be preferable, though the difference may not be significant given that most nodes in a complete tree will satisfy the balancing property. For example, when $n=2^k+2^{k-1}$ even though the root node has a severe imbalance, every other node in the tree is perfectly balanced. Although I didn’t perform an extensive comparison, I ran some tests using a complete tree encoding and compared it with a balanced tree encoding and neither method was clearly better. On the instances I tried, both methods had similar performance and both methods were faster than the other on some instances.

Personally I would be more comfortable calling using a complete binary tree a variant of the original encoding. Though, seemingly the reason Knuth used a complete tree in the first place was to in order to provide a very nice and concise description of the clauses (because in a complete binary tree there is an easy-to-state mapping from parent to children nodes and vice versa). Is modifying the encoding itself in order to simplify part of its description a reasonable tradeoff? That does sound less-than-ideal to me, unless it is truly the case that both encodings perform just as well as each other—in that case you might as well just follow the simpler description.

Incidentally, even if this doesn’t meet Knuth’s standard of an “error”, I’m proud to say already once found a 48-year-old typo in TAOCP vol. 2 and was sent a Knuth reward check. 🙂